A Bayesian Approach to Basketball

motivation

Bayesian inference is the process of using evidence (data) to update one’s prior beliefs about phenomena. The Bayesian approach can be especially useful in a field like sports, where we often don’t have large samples to work with (rendering frequentist approaches less effective). In this post, I’ll apply Bayesian principles in a basketball context.

prerequisites

This post assumes that the reader is familiar with probability theory and notation. I’ll provide a quick review, but I recommend reading up on Conditional Probability, Bayes’ Theorem, and the Law of Total Probability.

Conditional Probability

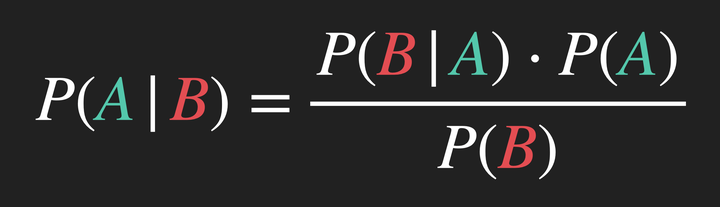

Bayes’ Theorem

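Bayesian inference is centered around one equation: Bayes’ Theorem. For a hypothesis $H$ and evidence $E$,

$$P(H \mid E) = \frac{P(E \mid H)\,P(H)}{P(E)}$$

where $P(H)$ is the prior, $P(E \mid H)$ is the likelihood, and $P(H \mid E)$ is the posterior.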

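Law of Total Probability

When $H$ and its complement $\neg H$ cover all possibilities, the probability of the evidence can be expanded as

$$P(E) = P(E \mid H)\,P(H) + P(E \mid \neg H)\,P(\neg H)$$

This is the form we’ll use for the denominator of Bayes’ Theorem.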
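To make the two formulas concrete, here’s a minimal R sketch that computes $P(E)$ with the Law of Total Probability and plugs it into Bayes’ Theorem for a binary hypothesis. The function name `update_posterior()` and its argument names are illustrative choices, not part of any package.

```r
# Hypothetical helper: posterior P(H | E) for a binary hypothesis H vs. not-H
update_posterior <- function(prior, p_e_given_h, p_e_given_not_h) {
  # Law of Total Probability: P(E) = P(E | H) P(H) + P(E | not H) P(not H)
  p_e <- p_e_given_h * prior + p_e_given_not_h * (1 - prior)
  # Bayes' Theorem: P(H | E) = P(E | H) P(H) / P(E)
  p_e_given_h * prior / p_e
}

# Example using the numbers from the basketball problem
update_posterior(prior = 0.2, p_e_given_h = 0.35, p_e_given_not_h = 0.15)
# [1] 0.3684211
```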

bayesian inference applied to basketball

problem

Suppose a good 3-point shooter makes 35% of their 3-pointers from the top of the arc, and a bad one makes 15% of their 3-pointers from the top of the arc.

We want to know if Christina is a good 3-point shooter. Before she shoots, we think that there is a 20% chance of her being a good 3-point shooter. Christina takes three 3-point shots from the top of the arc, and makes all three.

- What is the probability that Christina is a good 3-point shooter?

- How many 3-point shots from the top of the arc would Christina have to make in a row for us to believe there’s at least a 95% chance Christina is a good 3-point shooter?

solution

> part 1.

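Based on the problem statement, we want to update our belief that Christina is a good 3-point shooter after each shot she makes in a row. Let $H$ be the event that Christina is a good 3-point shooter and $E$ the event that she makes a 3-pointer from the top of the arc. Combining Bayes’ Theorem with the Law of Total Probability:

$$P(H \mid E) = \frac{P(E \mid H)\,P(H)}{P(E \mid H)\,P(H) + P(E \mid \neg H)\,P(\neg H)}$$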

From the problem statement:

$$P(H) = 0.2, \quad P(\neg H) = 0.8, \quad P(E \mid H) = 0.35, \quad P(E \mid \neg H) = 0.15$$

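Let’s look at our Bayesian update after Christina makes her first shot (writing $E_1$ for her first make):

$$P(H \mid E_1) = \frac{0.35 \times 0.2}{0.35 \times 0.2 + 0.15 \times 0.8} = \frac{0.07}{0.07 + 0.12} = \frac{0.07}{0.19}$$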

Here’s our posterior probability after Christina makes her first shot:

$$P(H \mid E_1) \approx 0.3684$$

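We can now update our prior probability: the posterior after the first make becomes the prior for the second shot.

$$P(H) \leftarrow P(H \mid E_1) \approx 0.3684, \qquad P(\neg H) \leftarrow 1 - 0.3684 = 0.6316$$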
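This iterative procedure is easy to express in R: feed each posterior back in as the next prior. The sketch below declares a hypothetical `update_posterior()` helper so it runs on its own, and it assumes Christina’s shots are independent given her true ability.

```r
# Hypothetical helper: one Bayesian update after a made shot
update_posterior <- function(prior, p_e_given_h, p_e_given_not_h) {
  p_e <- p_e_given_h * prior + p_e_given_not_h * (1 - prior)  # P(E)
  p_e_given_h * prior / p_e                                   # P(H | E)
}

prior <- 0.2
posteriors <- numeric(3)
for (shot in 1:3) {
  # the posterior after this make becomes the prior for the next shot
  prior <- update_posterior(prior, p_e_given_h = 0.35, p_e_given_not_h = 0.15)
  posteriors[shot] <- prior
}
round(posteriors, 4)
# [1] 0.3684 0.5765 0.7605
```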

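We can apply the same iterative update procedure to find the posterior probabilities after Christina’s second and third made shots, but first, let’s see if we can simplify our equation a bit. We’ll start by examining the equation for the posterior after Christina’s second make, assuming her shots are independent given whether she’s a good or bad shooter:

$$P(H \mid E_1, E_2) = \frac{P(E \mid H)\,P(H \mid E_1)}{P(E \mid H)\,P(H \mid E_1) + P(E \mid \neg H)\,P(\neg H \mid E_1)}$$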

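Since $P(H \mid E_1) = \frac{P(E \mid H)\,P(H)}{P(E_1)}$ and $P(\neg H \mid E_1) = \frac{P(E \mid \neg H)\,P(\neg H)}{P(E_1)}$, the common factor $1/P(E_1)$ cancels. So:

$$P(H \mid E_1, E_2) = \frac{P(E \mid H)^2\,P(H)}{P(E \mid H)^2\,P(H) + P(E \mid \neg H)^2\,P(\neg H)}$$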

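Repeating this argument for each additional make, we have a closed form for the posterior after $n$ consecutive makes $E_1, \dots, E_n$:

$$P(H \mid E_1, \dots, E_n) = \frac{P(E \mid H)^n\,P(H)}{P(E \mid H)^n\,P(H) + P(E \mid \neg H)^n\,P(\neg H)}$$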
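The closed form says the posterior after $n$ straight makes is $\frac{0.35^n \cdot 0.2}{0.35^n \cdot 0.2 + 0.15^n \cdot 0.8}$. A quick way to check that it matches the iterative update is to compute both in R and compare. The function name `posterior_after_n()` is an illustrative choice.

```r
# Closed-form posterior after n consecutive makes (vectorized over n)
posterior_after_n <- function(n, prior = 0.2, p_make_good = 0.35, p_make_bad = 0.15) {
  good <- p_make_good^n * prior
  bad  <- p_make_bad^n * (1 - prior)
  good / (good + bad)
}

# Sequential updates, feeding each posterior back in as the prior
sequential <- numeric(6)
prior <- 0.2
for (n in 1:6) {
  prior <- 0.35 * prior / (0.35 * prior + 0.15 * (1 - prior))
  sequential[n] <- prior
}

# The two approaches agree up to floating-point error
all.equal(posterior_after_n(1:6), sequential)
# [1] TRUE
```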

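The posterior probability after Christina makes her third shot in a row:

$$P(H \mid E_1, E_2, E_3) = \frac{0.35^3 \times 0.2}{0.35^3 \times 0.2 + 0.15^3 \times 0.8} = \frac{0.008575}{0.011275} \approx 0.7605$$

So after three straight makes, there’s roughly a 76% chance that Christina is a good 3-point shooter.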
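As an independent sanity check (a simulation, not part of the derivation above), we can draw many hypothetical shooters from the 20% prior, simulate three shots each, and look at the share of good shooters among those who went 3-for-3. The seed and the number of simulated shooters are arbitrary choices; the estimate should land close to 0.76.

```r
set.seed(42)
n_shooters <- 1e6

# Draw each shooter's type from the prior: TRUE = good shooter
is_good <- runif(n_shooters) < 0.2

# Each shooter's make probability depends on their type
p_make <- ifelse(is_good, 0.35, 0.15)

# Number of makes in 3 independent attempts per shooter
makes <- rbinom(n_shooters, size = 3, prob = p_make)

# Among shooters who made all three, what fraction are good?
estimate <- mean(is_good[makes == 3])
estimate
```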

> part 2.

We can write a simple loop to figure out how many makes in a row it would take for us to believe there’s at least a 95% chance Christina is a good 3-point shooter:

```r
# clear environment
rm(list = ls())

# variables
p_h <- 0.2              # prior probability
p_e_given_h <- 0.35     # probability of making shot given good shooter
p_e_given_not_h <- 0.15 # probability of making shot given bad shooter
posterior <- 0
thresh <- 0.95
i <- 0

# loop through shots until the posterior reaches the threshold
while (posterior < thresh) {
  # update shot iteration
  i <- i + 1

  # closed-form posterior after i consecutive makes
  posterior <- (p_e_given_h^i * p_h) /
    (p_e_given_h^i * p_h + p_e_given_not_h^i * (1 - p_h))

  bayes_update <- glue::glue(
    "shots made in a row: {i} \nposterior probability: {posterior}\n\n"
  )

  print(bayes_update)
}
```

```
## shots made in a row: 1
## posterior probability: 0.368421052631579
##
## shots made in a row: 2
## posterior probability: 0.576470588235294
##
## shots made in a row: 3
## posterior probability: 0.760532150776053
##
## shots made in a row: 4
## posterior probability: 0.881100917431193
##
## shots made in a row: 5
## posterior probability: 0.945328758647843
##
## shots made in a row: 6
## posterior probability: 0.975813876332269
```

Christina would have to make 6 shots in a row from the top of the arc for us to believe there’s at least a 95% chance that she is a good 3-point shooter. After 5 makes the posterior is about 0.945, just short of the threshold; after 6 it’s about 0.976.
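The `while` loop works fine; an alternative sketch is to evaluate the closed-form posterior for a range of streak lengths at once and take the first one that clears the threshold. The cap of 20 shots is an arbitrary assumption that comfortably covers this case.

```r
p_h <- 0.2               # prior probability
p_e_given_h <- 0.35      # P(make | good shooter)
p_e_given_not_h <- 0.15  # P(make | bad shooter)
thresh <- 0.95

n <- 1:20  # candidate streak lengths (arbitrary cap)
posterior <- (p_e_given_h^n * p_h) /
  (p_e_given_h^n * p_h + p_e_given_not_h^n * (1 - p_h))

# first streak length whose posterior reaches the threshold
min_makes <- n[which(posterior >= thresh)[1]]
min_makes
# [1] 6
```

Because the posterior only grows with each additional make, the first index above the threshold is also the minimum number of makes needed.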

> an alternate approach

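Let’s try tackling this problem from a different perspective. We’ll start by asking “what’s the probability that a good shooter will make $n$ shots in a row from the top of the arc?”

We then have:

$$P(E_1, \dots, E_n \mid H) = 0.35^n, \qquad P(E_1, \dots, E_n \mid \neg H) = 0.15^n$$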
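One way to finish from this perspective (a sketch, not necessarily the original derivation) is to work in odds. Assuming independent shots, a good shooter makes $n$ in a row with probability $0.35^n$ and a bad one with probability $0.15^n$, so the posterior odds are the prior odds times the likelihood ratio, $\frac{0.2}{0.8}\left(\frac{0.35}{0.15}\right)^n$. A posterior of at least 0.95 corresponds to odds of at least $0.95/0.05 = 19$, so we can solve for $n$ directly:

```r
prior_odds  <- 0.2 / 0.8          # P(H) / P(not H)
lik_ratio   <- 0.35 / 0.15        # per-make likelihood ratio
target_odds <- 0.95 / (1 - 0.95)  # odds equivalent of a 95% posterior

# Solve prior_odds * lik_ratio^n >= target_odds for n
n_exact <- log(target_odds / prior_odds) / log(lik_ratio)
n_exact  # roughly 5.11
ceiling(n_exact)
# [1] 6
```

Since $n$ must be a whole number of shots, rounding up gives 6, matching the loop.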

wrapping things up

In this post, we worked through a probability problem in a sports context using two different methods, and saw that both led to the same answer. That’s the beauty of math: there’s rarely only one path to a solution. This was meant to be a straightforward application of Bayesian inference to a simple problem, but it turned into a fairly lengthy post. For those of you who stuck it out with me, I hope you were able to follow along and found the process of solving this problem as rewarding as I did. Thanks for reading!